- Skills: PyTorch, Keras, Scikit-learn, TensorFlow, Seaborn, NLTK

- Code: GitHub Link

- Progress: Completed

This project was completed under the supervision of NLP experts at Northeastern University. The goal was to compare the performance of three different models on a Kaggle dataset of text samples annotated with emotion labels.

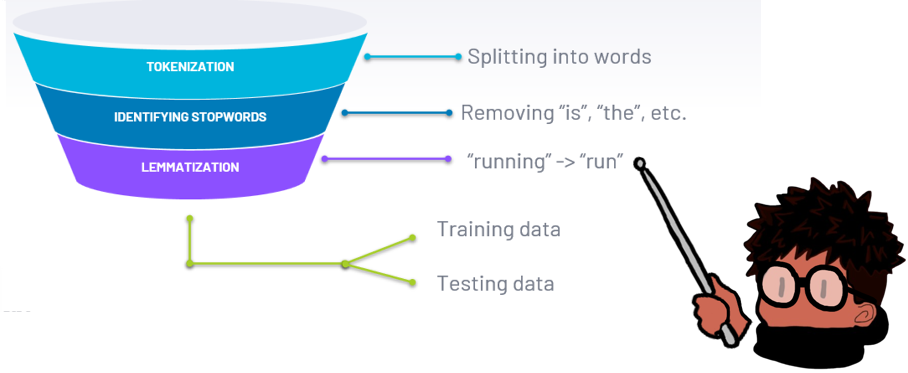

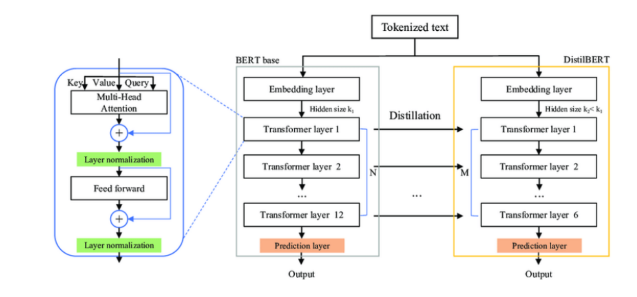

The models used in the experiment were Logistic Regression, an LSTM, and a DistilBERT transformer. I prepared the data and split it into training, validation, and test sets. All three models performed well, reaching accuracies of 87%, 92%, and 93% respectively. DistilBERT scored highest, likely due to its self-attention mechanism.
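As a rough illustration of the data-preparation step and the simplest of the three models, here is a minimal sketch using scikit-learn. The write-up does not specify the split ratios, features, or hyperparameters, so the 60/20/20 stratified split, the TF-IDF features, the tiny inline dataset, and the helper names `split_three_way` and `train_baseline` are all hypothetical assumptions, not the project's actual setup.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline


def split_three_way(texts, labels, val_size=0.2, test_size=0.2, seed=42):
    """Hypothetical helper: stratified train/validation/test split (assumed ratios)."""
    # Carve off the test set first, preserving label proportions.
    x_rest, x_test, y_rest, y_test = train_test_split(
        texts, labels, test_size=test_size, stratify=labels, random_state=seed
    )
    # Rescale so the validation set is val_size of the *full* dataset.
    rel_val = val_size / (1.0 - test_size)
    x_train, x_val, y_train, y_val = train_test_split(
        x_rest, y_rest, test_size=rel_val, stratify=y_rest, random_state=seed
    )
    return (x_train, y_train), (x_val, y_val), (x_test, y_test)


def train_baseline(x_train, y_train):
    """Hypothetical helper: TF-IDF features + logistic regression (assumed setup)."""
    model = make_pipeline(
        TfidfVectorizer(ngram_range=(1, 2), sublinear_tf=True),
        LogisticRegression(max_iter=1000),
    )
    model.fit(x_train, y_train)
    return model


# Tiny made-up dataset standing in for the Kaggle emotion data.
TOY_DATA = {
    "joy": ["i feel so happy today", "what a wonderful surprise",
            "this makes me smile", "i am delighted with the news",
            "we laughed all evening"],
    "anger": ["i am furious about this", "this makes me so mad",
              "i hate waiting in line", "that remark was infuriating",
              "stop ignoring my messages"],
    "sadness": ["i feel so lonely", "i miss my old friends",
                "today was a gloomy day", "i am heartbroken",
                "nothing seems to matter anymore"],
}
texts = [t for label, samples in TOY_DATA.items() for t in samples]
labels = [label for label, samples in TOY_DATA.items() for _ in samples]

(x_train, y_train), (x_val, y_val), (x_test, y_test) = split_three_way(texts, labels)
model = train_baseline(x_train, y_train)

# The validation score would guide model selection; the test score is the
# final held-out number reported once at the end.
val_acc = accuracy_score(y_val, model.predict(x_val))
test_acc = accuracy_score(y_test, model.predict(x_test))
print(f"val accuracy: {val_acc:.2f}, test accuracy: {test_acc:.2f}")
```

On a dataset this small the printed scores carry no meaning; the point is the shape of the pipeline. In a comparison like this one, the LSTM and DistilBERT models would reuse the same three splits so that their accuracies are directly comparable.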